Artificial intelligence has already changed the fundamentals of software development, and many people watch this trend with skepticism. Does it really matter? Or is it just the next hype that will soon fizzle out? In fact, AI is more than a nice gimmick: early tools already show how simple requirements can be implemented quickly, efficiently, and mostly reliably. Of course, developers still have to write the prompts to get the right results. But often only about 20 percent of their skills are still needed. What does that mean? Processes can be accelerated and repetitive tasks handed off, and the potential is far from exhausted.

The well-known thinker Gunter Dueck once spoke of the "hubris vs. hype" curve: first a new technology is overhyped, then comes the trough of disillusionment. Right there, where real productivity starts to emerge, the skeptics often step forward: "I told you so, none of this is any good." Yet it is exactly at this point that it becomes clear who takes the next step and who gets left behind.

Even though AI is not perfect, it is already highly relevant. Perfection is not a prerequisite for usefulness. Those who use it today automate processes, speed up workflows, and free up room for creativity. Those who ignore it fall behind.

This is not about glorifying AI. It will not replace every person, nor will it automatically make every piece of software better. But it is a powerful tool, and in the right hands a genuine productivity booster for software development. The difference lies in the approach: developers who combine technical competence with strategic thinking, adapt their role in the development process, and recognize which tasks can sensibly be delegated to these tools can use AI to speed up software projects in a targeted way, even improve quality, and unlock new potential.

I remember the WeAreDevelopers conference 2023 in Berlin well. There, Thomas Dohmke, CEO of GitHub, presented GitHub Copilot. My first thought: “Quite nice, certainly helpful for smaller functions.” Revolutionary? Hardly. But over time it became clear: this is not just a better code completer, it is a new way of working. Today I see tools debugging APIs, generating database queries, or preparing migration scripts on their own, without human help. That is more than automation. It is a paradigm shift.

Our role as developers is changing. We hand tasks to the machine and think in goals rather than in every single command. AI becomes a reliable partner that supports us around the clock. Those who understand this use AI not as a gimmick but as a strategic tool.

So how do you get started? Quite simply: try out tools. Test freely available versions, or invest 10 or 20 euros in a trial month. Start with private projects: instead of working everything out yourself, give the task to the AI tool and gather experience. It doesn't have to be perfect; what matters is learning, just as you would learn a new framework or language.

How do you stay up to date? There is a flood of information, but nobody has to follow all of it. A few well-chosen sources are enough, whether a YouTube channel, a LinkedIn newsletter, or a blog. Step by step, this becomes a routine, just like learning a new framework: small projects, tests, insights. And suddenly it becomes clear how much is possible.

The new is here. Not yet perfect, but powerful. It is growing. And it is here to stay.

Now is the moment to engage with it. Now is the time to learn.

Those who start today will benefit tomorrow. But those who keep typing on a typewriter while others already use Word and AI will fall behind.

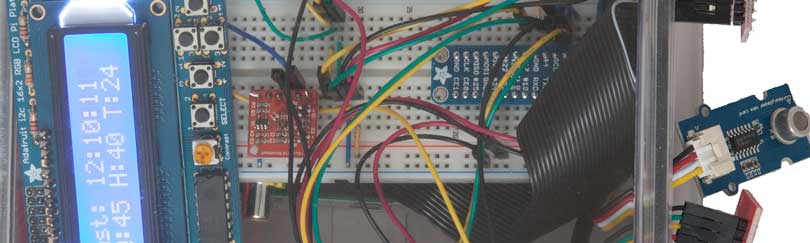

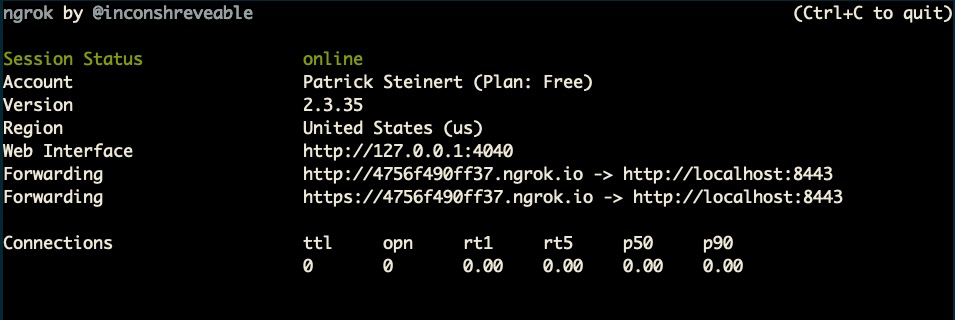

I was supposed to give my talk on “IoT Analytics – Stream and Batch Processing” at the buildingIoT conference yesterday. Well, it wasn't meant to be. So I have summarized my takeaways here.